NeurIPS'21

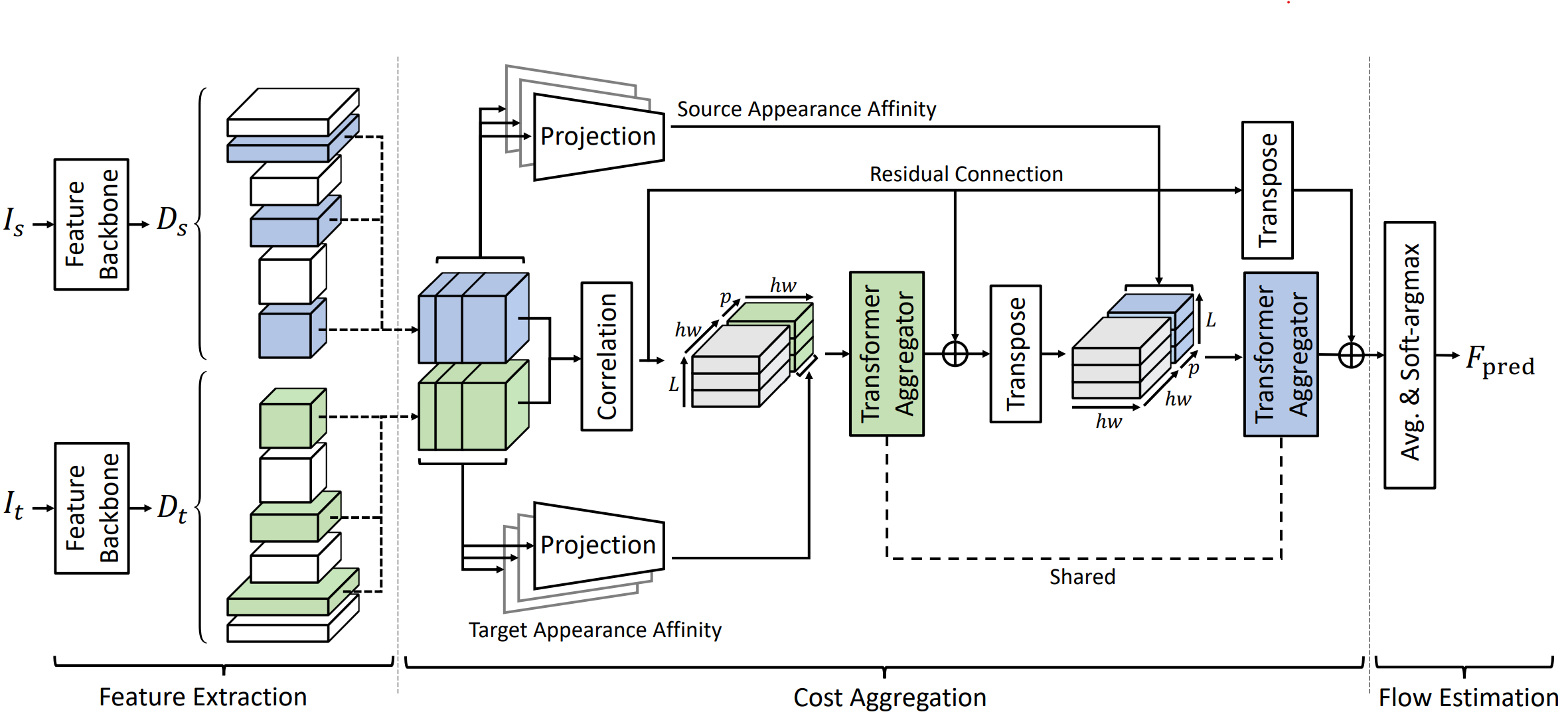

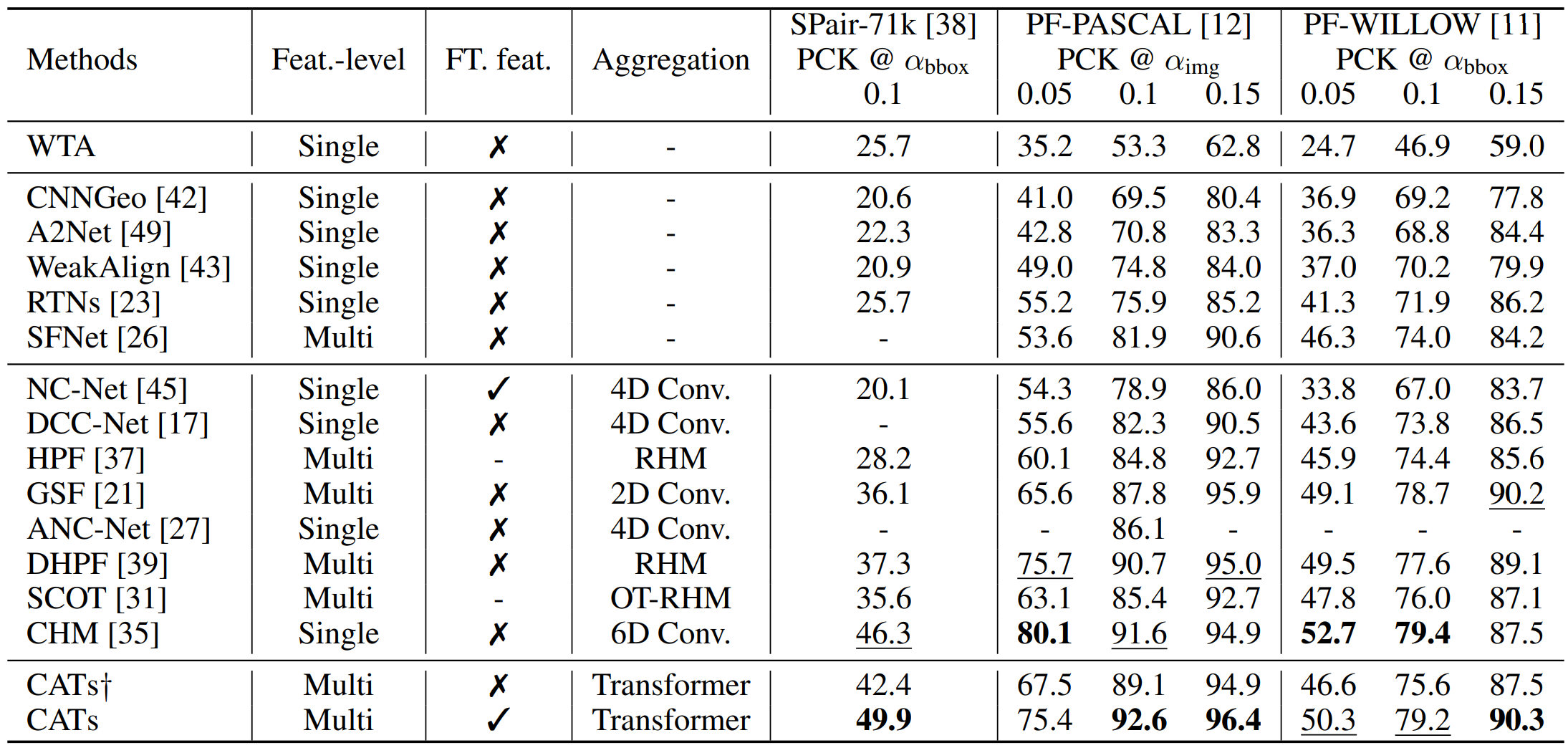

We propose a novel cost aggregation network, called Cost Aggregation Transformers (CATs), to find dense correspondences between semantically similar images, a task made harder by large intra-class appearance and geometric variations. Cost aggregation is a crucial step in matching tasks because the matching accuracy depends on the quality of its output. Hand-crafted cost aggregation methods lack robustness to severe deformations. CNN-based methods inherit a limitation of CNNs: their limited receptive fields prevent them from discriminating incorrect matches. In contrast, CATs explore global consensus among the initial correlation maps, using architectural designs that let us fully leverage the self-attention mechanism. Specifically, we include appearance affinity modeling to aid the cost aggregation process by disambiguating the noisy initial correlation maps, and we propose multi-level aggregation to efficiently capture different semantics from hierarchical feature representations. We further combine a swapping self-attention technique with residual connections, not only to enforce consistent matching but also to ease the learning process, and we find that these yield a clear performance boost. We conduct experiments demonstrating the effectiveness of the proposed model over the latest methods and provide extensive ablation studies.
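The swapping self-attention and residual connections described above can be illustrated with a minimal NumPy sketch. It rests on two assumptions. First, a toy single-head attention with identity projections stands in for the learned Transformer. Second, swapping is read as refining the map with source positions as tokens, transposing so target positions become tokens, refining again, and transposing back. The names `self_attention` and `swap_aggregate` are hypothetical and are not taken from the CATs code.

```python
import numpy as np


def self_attention(tokens):
    """Single-head self-attention with identity Q/K/V projections.

    tokens: (N, D) array, one token per row. Identity projections are a
    simplification for illustration; a real Transformer learns them.
    """
    scores = tokens @ tokens.T / np.sqrt(tokens.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens


def swap_aggregate(corr):
    """Refine a (num_src, num_tgt) correlation map in both matching directions.

    Hypothetical sketch of swapping self-attention with residual connections:
    1. treat each source position's row of scores as a token and refine it,
    2. transpose so that target positions become tokens and refine again,
    3. transpose back to the original (source, target) layout.
    """
    refined = corr + self_attention(corr)            # residual, source -> target
    swapped = refined.T + self_attention(refined.T)  # residual, target -> source
    return swapped.T


rng = np.random.default_rng(0)
corr = rng.uniform(-1, 1, size=(6, 4))
print(swap_aggregate(corr).shape)  # (6, 4)
```

Because each pass only adds a residual update, the sketch treats the source and target images symmetrically. Reordering the source or target positions reorders the output in the same way.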

Overall network architecture

Figure 1. Our network consists of feature extraction, cost aggregation, and flow estimation modules. We first extract multi-level dense features and construct a stack of correlation maps. We then concatenate these with embedded features and feed the result into the Transformer-based cost aggregator to obtain a refined correlation map. The flow is then inferred from the refined map.
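The first and last stages in Figure 1 can be sketched in NumPy under stated assumptions. This is not the authors' implementation. Correlation is assumed to be cosine similarity between L2-normalized features, and all feature levels are assumed to be resized to a common H x W beforehand. Flow is read out with a soft-argmax over target positions, which is one common choice. The temperature value and all function names are hypothetical.

```python
import numpy as np


def correlation(src_feat, tgt_feat, eps=1e-8):
    """Cosine-similarity correlation between all source and target positions.

    src_feat, tgt_feat: (C, H, W) feature maps from one backbone level.
    Returns an (H*W, H*W) map: row = source position, column = target position.
    """
    c = src_feat.shape[0]
    s = src_feat.reshape(c, -1)
    t = tgt_feat.reshape(c, -1)
    s = s / (np.linalg.norm(s, axis=0, keepdims=True) + eps)  # unit norm per position
    t = t / (np.linalg.norm(t, axis=0, keepdims=True) + eps)
    return s.T @ t


def correlation_stack(src_levels, tgt_levels):
    """Stack per-level correlation maps into an (L, H*W, H*W) array.

    Channel counts may differ per level, but every level must share H x W.
    """
    return np.stack([correlation(s, t) for s, t in zip(src_levels, tgt_levels)])


def soft_argmax_flow(corr, h, w, temperature=0.02):
    """Flow field (H, W, 2) as (dx, dy) from an (H*W, H*W) correlation map."""
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    coords = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)  # (HW, 2) as (x, y)
    logits = corr / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    prob = np.exp(logits)
    prob = prob / prob.sum(axis=1, keepdims=True)  # softmax over target positions
    expected = prob @ coords  # expected matching (x, y) for each source position
    return (expected - coords).reshape(h, w, 2)
```

If the source and target features are identical, each position matches itself most strongly, so the estimated flow is close to zero everywhere. In the real model, the refined map from the aggregator would replace the raw correlation before the flow readout.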

Transformer Aggregator

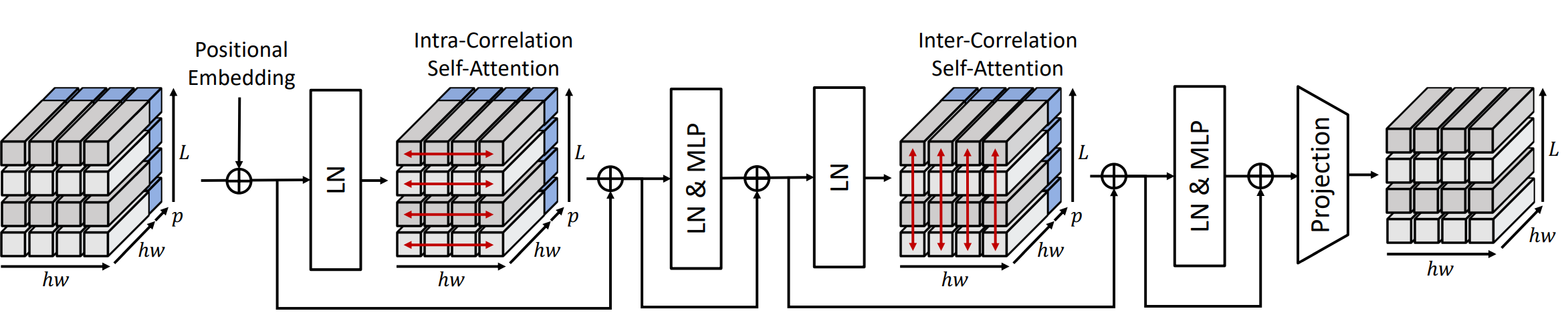

Figure 2. Illustration of the Transformer aggregator. Given correlation maps with projected features, the Transformer aggregator, consisting of intra- and inter-correlation self-attention with LN and MLP, refines the inputs not only across spatial domains but also across levels.
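The two attention directions in Figure 2 can be illustrated with a minimal NumPy sketch. The input is laid out as (L, N, D): levels, tokens, and channels, where each token stands for one source position's correlation scores together with its projected features. Several simplifications are assumptions of this sketch: identity Q/K/V projections, the order of intra- before inter-correlation attention, and a single shared MLP. The MLP weights `w1` and `w2` are hypothetical parameters.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """LayerNorm over the channel axis, without learned scale and shift."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def attention(x):
    """Self-attention over the second-to-last axis (identity Q/K/V projections)."""
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ x


def mlp(x, w1, w2):
    """Two-layer per-token MLP with ReLU."""
    return np.maximum(x @ w1, 0.0) @ w2


def transformer_aggregator(x, w1, w2):
    """x: (L, N, D). Returns a refined array of the same shape.

    Intra-correlation attention mixes the N tokens within each level;
    inter-correlation attention mixes the L levels at each token.
    """
    x = x + attention(layer_norm(x))        # intra: across spatial tokens, per level
    x_t = np.swapaxes(x, 0, 1)              # (N, L, D)
    x_t = x_t + attention(layer_norm(x_t))  # inter: across levels, per token
    x = np.swapaxes(x_t, 0, 1)              # back to (L, N, D)
    return x + mlp(layer_norm(x), w1, w2)
```

Since attention, LayerNorm, and the MLP all treat tokens and levels symmetrically, reordering the input tokens or levels reorders the output in the same way. The refinement itself comes only from the content of the correlation scores and features.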

Experimental Results

Table 1. Quantitative evaluation on standard benchmarks [38, 11, 12]. Higher PCK is better. The best results are in bold, and the second-best results are underlined. CATs† denotes CATs without fine-tuning the feature backbone. Feat.-level: feature-level; FT. feat.: fine-tuned features.
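PCK (percentage of correct keypoints) is the metric reported in Table 1. A minimal sketch of the usual definition for one image pair follows. A transferred keypoint counts as correct when its distance to the ground truth is at most alpha times the larger side of a reference region. Depending on the benchmark, that region is the image or the object bounding box. The function name and the default `alpha=0.1` are assumptions of this sketch and are not taken from the table.

```python
import numpy as np


def pck(pred_kps, gt_kps, ref_size, alpha=0.1):
    """Percentage of Correct Keypoints for one image pair.

    pred_kps, gt_kps: (K, 2) arrays of (x, y) keypoint locations.
    ref_size: (h, w) of the reference region (image or object bounding box).
    A keypoint is correct if its error is <= alpha * max(h, w).
    """
    threshold = alpha * max(ref_size)
    errors = np.linalg.norm(np.asarray(pred_kps, float) - np.asarray(gt_kps, float), axis=1)
    return float((errors <= threshold).mean())


gt = [[10, 10], [20, 20], [30, 30]]
pred = [[12, 10], [20, 35], [30, 31]]
print(pck(pred, gt, ref_size=(100, 50)))  # errors 2, 15, 1 vs. threshold 10 -> 0.666...
```

A benchmark-level score is then typically an average over keypoints or image pairs, depending on the evaluation protocol.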

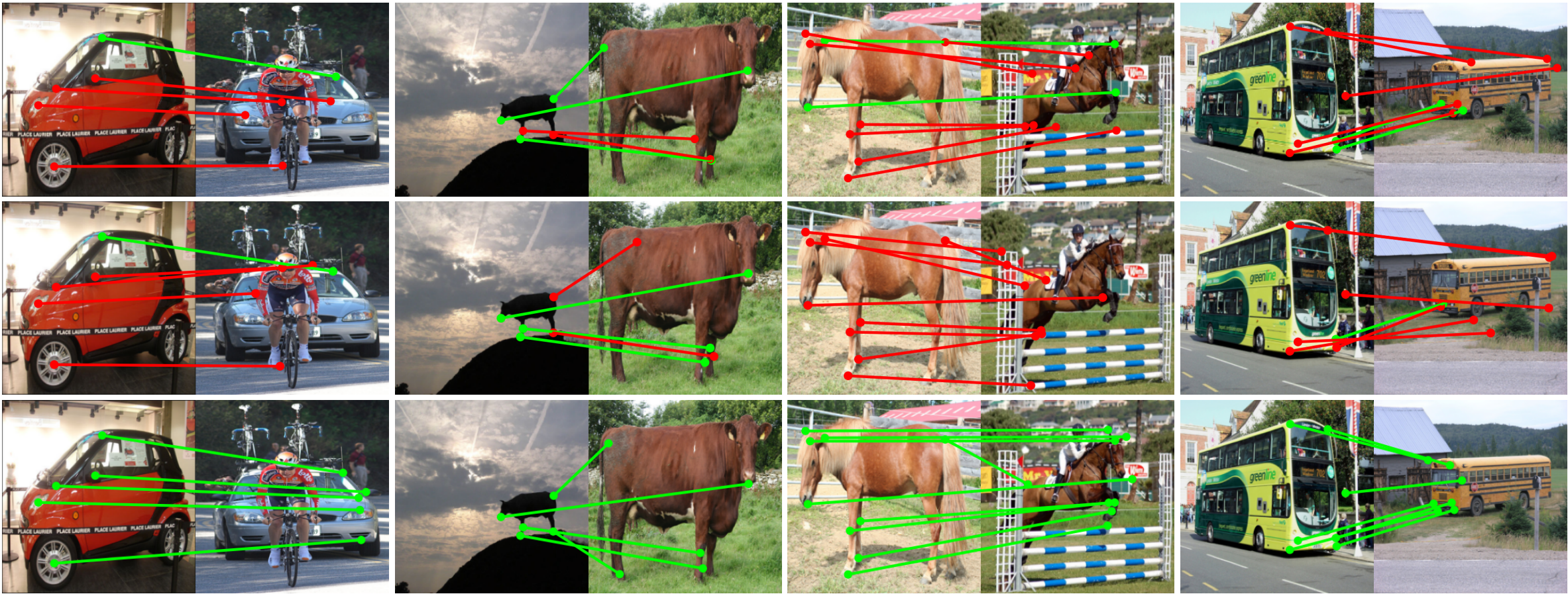

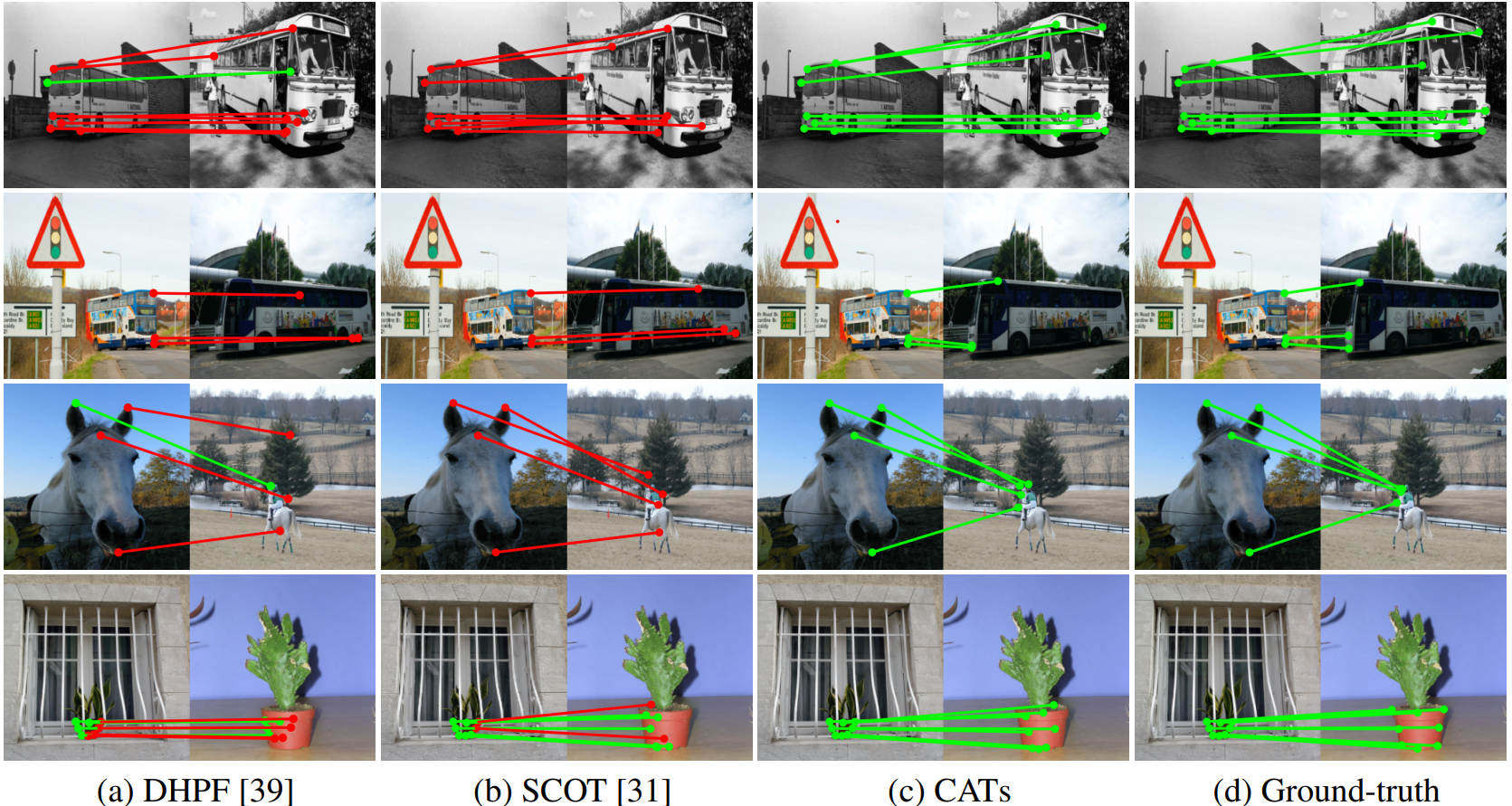

Figure 3. Qualitative results on SPair-71k [38]: keypoint transfer results by (a) DHPF [39], (b) SCOT [31], and (c) CATs, with (d) the ground truth. Green and red lines denote correct and wrong predictions, respectively, with respect to the ground truth.

Visualizations

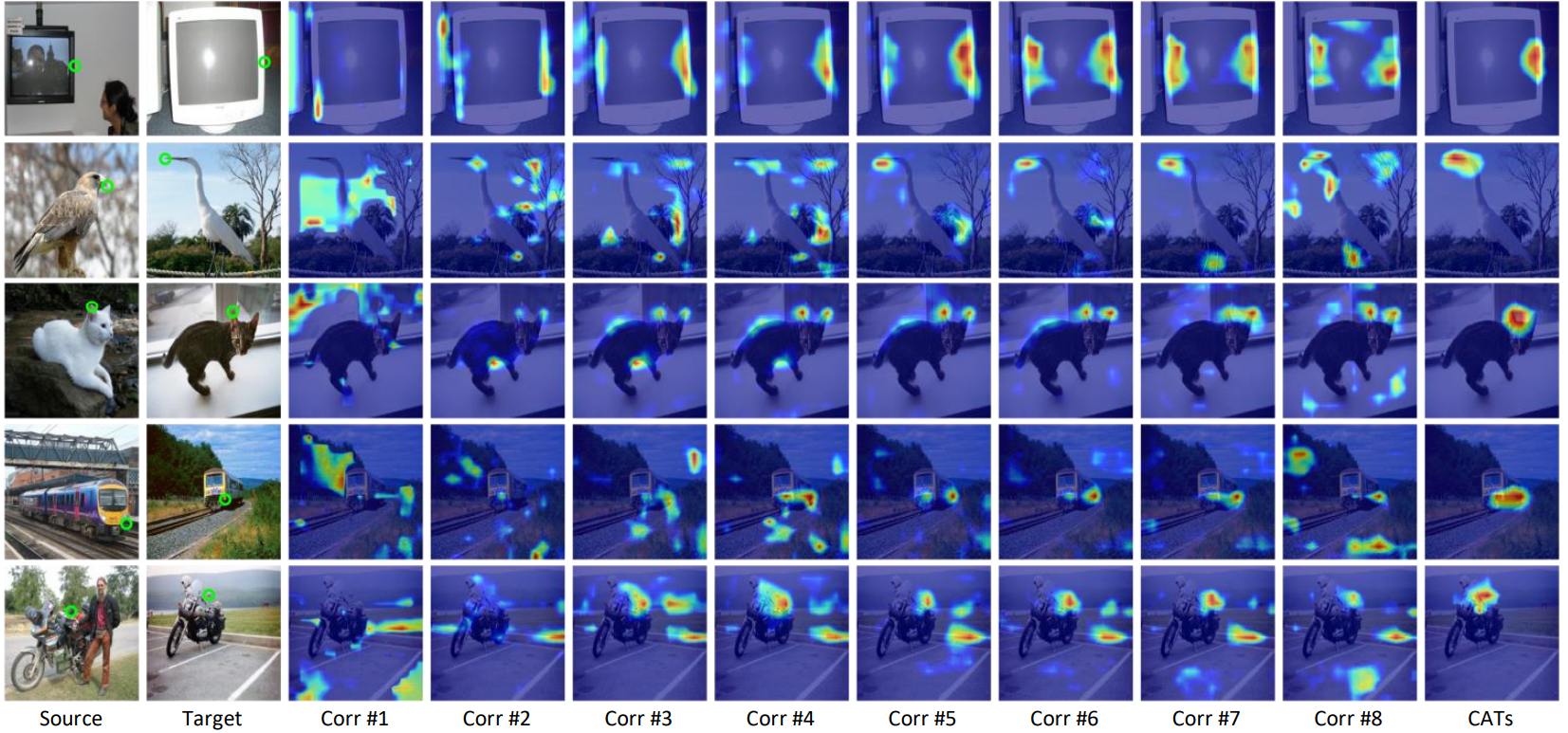

Figure 4. Visualization of multi-level aggregation. Each correlation map is computed from one of layers (0, 8, 20, 21, 26, 28, 29, 30) of ResNet-101, and our proposed method successfully aggregates these multi-level correlation maps.

S. Cho*, S. Hong*, S. Jeon, Y. Lee, K. Sohn, S. Kim. CATs: Cost Aggregation Transformers for Visual Correspondence. In NeurIPS, 2021. (hosted on arXiv)

Acknowledgements